The RLDM 2025 Handout provides general information for attendees.

The RLDM 2025 Abstract Booklet contains all abstracts accepted at the conference.

Here is a list of posters by date, with poster board numbers on the right.

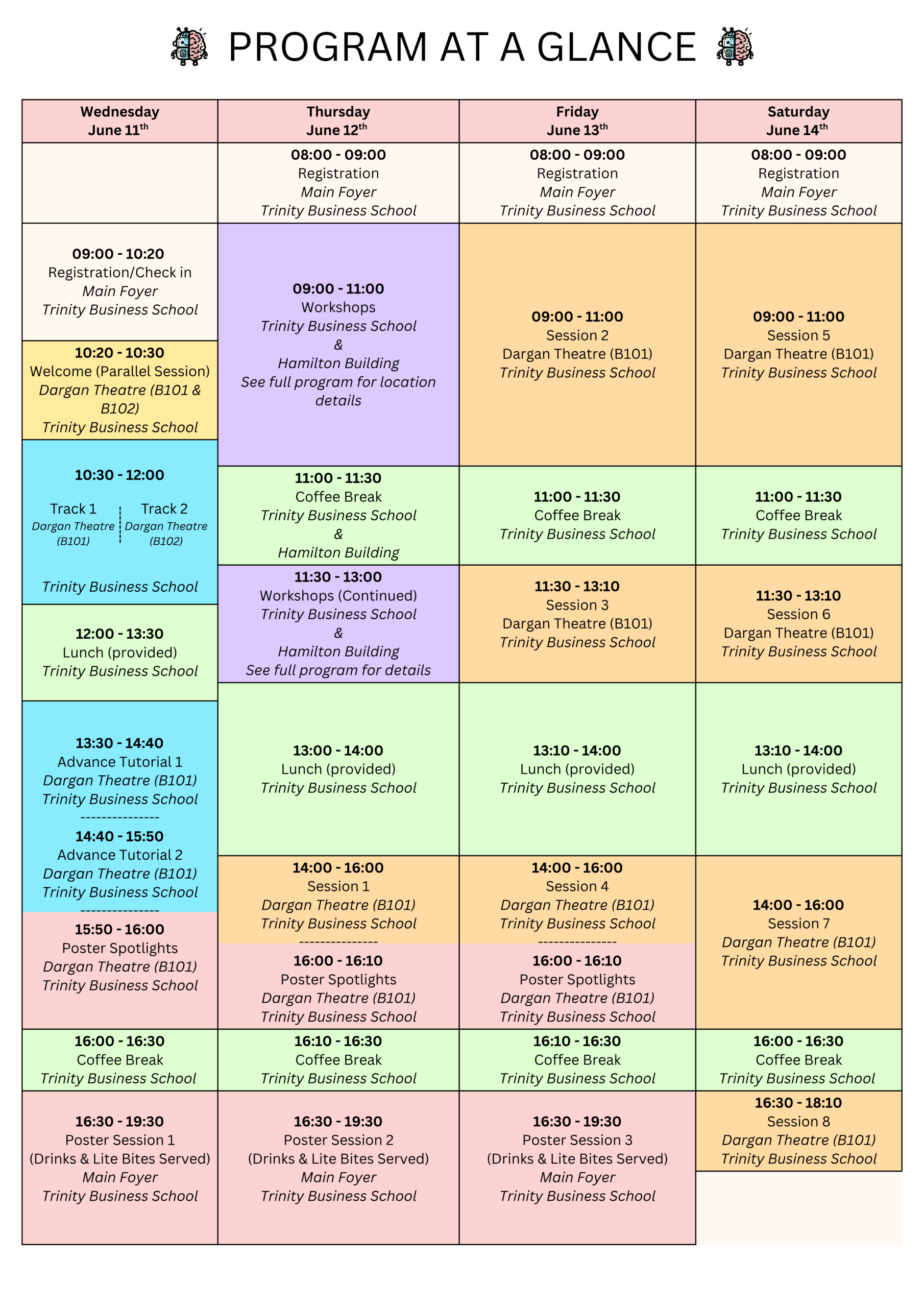

Wednesday

Introductory tutorials

- 10:20-10:30am: Welcome by Stefano Albrecht and Anne Collins (Parallel Session; Dargan Theatre B101 & B102)

- 10:30am-12:00pm (Dargan Theatre B101): Thomas Akam – Brain architecture for adaptive behaviour

- 10:30am-12:00pm (Dargan Theatre B102): Anna Harutyunyan – Reinforcement learning: an anti-tutorial

Advanced tutorials (session chair: Angela Langdon)

- 1:30-2:40pm: Caroline Charpentier – Leveraging individual differences in RLDM

- 2:40-3:50pm: Ben Eysenbach – Self-Supervised Representations and Reinforcement

Poster session

- 3:50-4:00pm: Poster spotlights #1

- 4:30-7:30pm: Poster session #1

Thursday

Workshops

- 9:00am-1:00pm: See Workshops

Session 1: Developmental mechanisms and exploration (session chair: Stefano Albrecht)

- 2:00-2:40pm: Cate Hartley and Michael Littman – What you need when you need it is all you need

- 2:40-3:20pm: Andreas Krause – Uncertainty-guided Exploration in Model-based Deep Reinforcement Learning

- 3:20-4:00pm: Malcolm MacIver – The Geological Basis of Intelligence

Poster session

- 4:00-4:10pm: Poster spotlights #2

- 4:30-7:30pm: Poster session #2

Friday

Session 2: Uncertainty and exploration (session chair: Bob Wilson)

- 9:00-9:40am: Claire Vernade – Partially Observable Reinforcement Learning with Memory Traces

- 9:40-10:00am: Kelly Zhang et al. – Informed Exploration via Autoregressive Generation

- 10:00-10:20am: Janne Reynders et al. – Cognitive mechanisms of strategic variability in stable, volatile, and adversarial environments

- 10:20-11:00am: Romy Froemer – Attention in value-based choice: Active and passive uncertainty reduction mechanisms

Session 3: Multi-agent interaction (session chair: Redmond O’Connell)

- 11:30am-12:10pm: Sam Devlin – Towards Human-AI Collaboration: Lessons Learnt From Imitating Human Gameplay

- 12:10-12:30pm: Sonja Johnson-Yu et al. – Investigating active electrosensing and communication in deep-reinforcement learning trained artificial fish collectives

- 12:30-1:10pm: Amanda Prorok – Synthesizing Diverse Policies for Multi-Robot Coordination

Session 4: Modeling the world and state representations (session chair: Matt Nassar)

- 2:00-2:40pm: Tim Rocktaeschel – Open-Endedness and World Models

- 2:40-3:00pm: Matthew Barker and Matteo Leonetti – Translating Latent State World Model Representations into Natural Language

- 3:00-3:20pm: Jasmine Stone and Ashok Litwin-Kumar – A model of distributed reinforcement learning systems inspired by the Drosophila mushroom body

- 3:20-4:00pm: Angela Radulescu – Attention and affect in human RLDM: insights from computational psychiatry

Poster session

- 4:00-4:10pm: Poster spotlights #3

- 4:30-7:30pm: Poster session #3

Saturday

Session 5: Agency, habits, and biases (session chair: Ian Ballard)

- 9:00-9:40am: Sanne de Wit – Investigating Habit Making and Breaking in Real-World Settings

- 9:40-10:00am: Kelly Donegan et al. – Compulsivity is associated with an increase in stimulus-response habit learning

- 10:00-10:20am: Carlos Brito and Daniel McNamee – Hierarchical Integration of RL and Cerebellar Control for Robust Flexible Locomotion

- 10:20-10:40am: Sabrina Abram et al. – Agency in action selection and action execution produce distinct biases in decision making

- 10:40-11:00am: David Abel et al. – Agency is Frame-Dependent

Session 6: Offline RL, multi-agent interaction, and decision making (session chair: Roshan Cools)

- 11:30am-12:10pm: Xianyuan Zhan – Towards Real-World Deployable Data-Driven Reinforcement Learning

- 12:10-12:30pm: Jordan Lei et al. – Choice and Deliberation in a Strategic Planning Game in Monkeys

- 12:30-1:10pm: Valentin Wyart – Alternatives to exploration? Moving up and down the ladder of causation in humans

Session 7: Foundations of RL algorithms and neural signals (session chair: Sam McDougle)

- 2:00-2:40pm: Doina Precup – On making artificial RL agents closer to natural ones

- 2:40-3:00pm: Michael Bowling and Esraa Elelimy – Rethinking the Foundations for Continual Reinforcement Learning

- 3:00-3:40pm: Nicolas Tritsch – Defining timescales of neuromodulation by dopamine

- 3:40-4:00pm: Margarida Sousa et al. – Learning distributional predictive maps for fast risk-adaptive control

Session 8: Planning (session chair: Anne Collins)

- 4:30-4:50pm: Sixing Chen et al. – Meta-learning of human-like planning strategies

- 4:50-5:30pm: Wei Ji Ma – Human planning and memory in combinatorial games

- 5:30-5:40pm: Closing words